Apple Pauses Plan to Scan Photos for CSAM

The move comes after the company faced widespread backlash.

After facing a wave of backlash from experts and the public, Apple announced that it has paused its plan to scan its users’ devices for child sexual abuse material (CSAM).

The move comes less than a month after the tech company first publicized its anti-CSAM software.

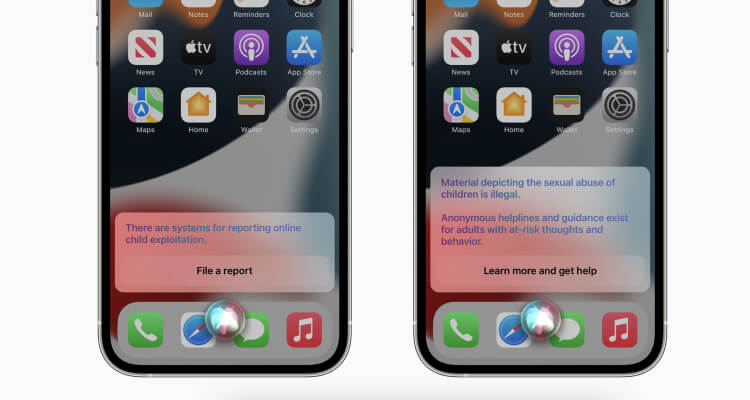

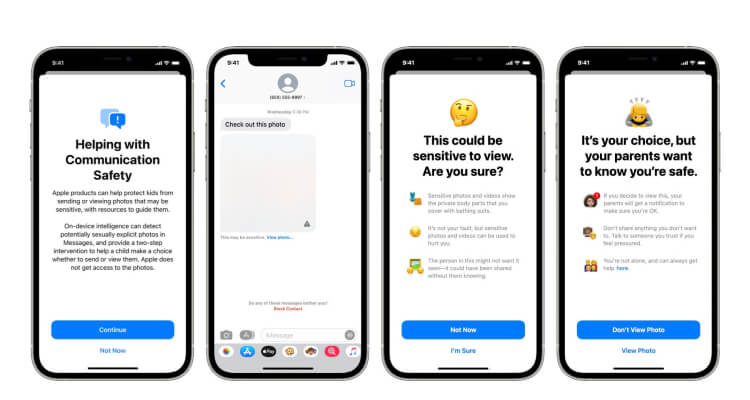

“Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material,” the company said. “Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

A nuanced security issue

Chris Hoofnagle, Professor of Law in Residence at the University of California, Berkeley, believes that Apple’s plan is imperfect but practical.

“Our primary pursuit should be human freedoms, with technology in service of that freedom,” Hoofnagle said. “Apple’s approach attempts to right these priorities.”

Edward Snowden, on the other hand, wrote a passionate blog post opposing the plan. “As a parent, I’m here to tell you that sometimes it doesn’t matter why the man in the handsome suit is doing something,” he wrote. “What matters are the consequences.”

Snowden sees on-device CSAM scanning as a dangerous step toward broader government surveillance.

Image source: Apple