Sexbot Perspectives: Robots Are Potential Tools to Study and Treat Sexual Behavior

Could sex robots increase human empathy?

As part of our ongoing “Sexbot Perspectives” series, we’ve asked several experts this question: What is the potential, and what are the possible pitfalls, of developing sex robots? Our aim is to create dialogue and help shape the best possible future, one that will be deeply influenced by breakthroughs in artificial intelligence and robotics.

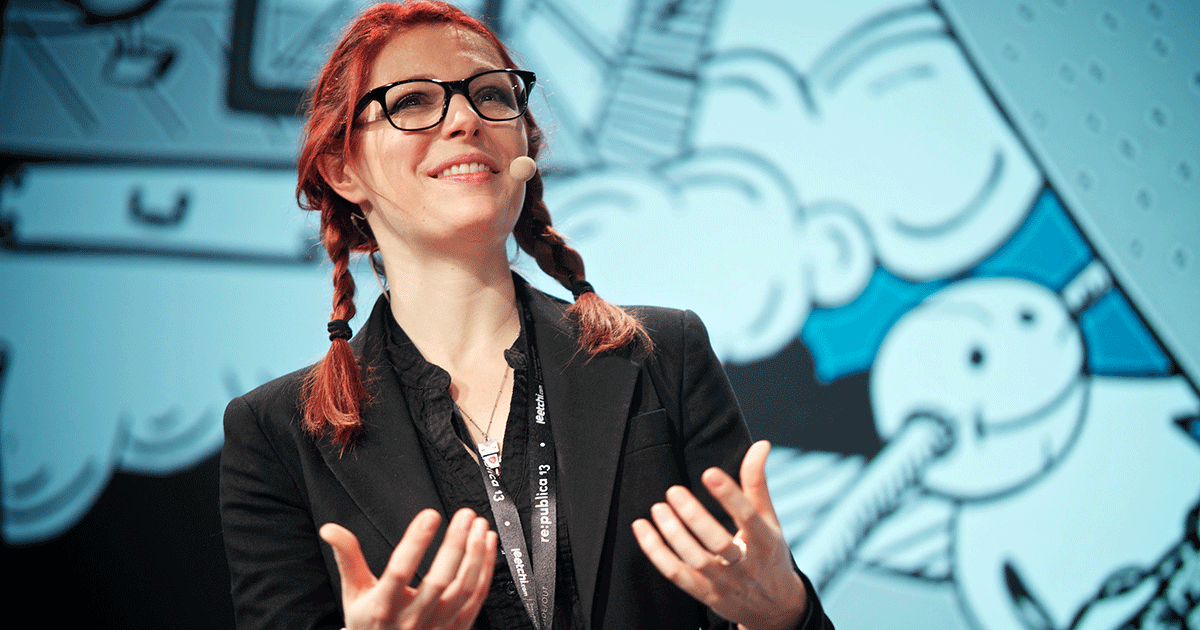

Kate Darling, a self-proclaimed “Mistress of Machines,” researches robot ethics and human-robot interaction at MIT Media Lab. Her main interest is using machines to explore violence and empathy.

This timely subject includes studying anthropomorphism: the tendency for people to project lifelike traits onto non-human entities, and, as a result, relate emotionally to them.

At speaking events, Darling admits to loving robots more than just about anyone. Studying them is more than a career—it’s a passion. So I’m happy to share Darling’s response to Future of Sex on the potential and possible downsides of sex robots.

[box type=”shadow”]

Robots are interesting because sometimes our subconscious treats them more like living things than machines. That means sex robots have the potential to be tools that help study sexual behavior, or to be used therapeutically. They also have the potential to provide an outlet for socially undesirable sexual urges.

On the other hand, it would be a pitfall if they end up encouraging and strengthening those urges (we don’t know if that’s the case). There’s also the question of entrenching gender biases and stereotypes in robotic design that could be harmful to both sexes.

Some have called for a preemptive ban on sex robots. I think that’s ridiculous.

[/box]

Robots: friends or foes?

Darling acknowledges the potential dark side of integrating lifelike robots into everyday life, and not only those designed for sexual pleasure. At a 2015 conference in Sweden, she raised concerns about replacing human care with robots and about deceiving people into believing the robots are alive.

Then there’s the issue of privacy and data security. She also brought up the potential risk of emotional manipulation by people who sell robots and their software. Would it be fair to include in-app purchases or a mandatory $10,000 update?

While there may not yet be clear answers, Darling told the audience that these are issues we need to think about. But they should be addressed with the recognition that robots will be incredibly useful to society.

“I don’t want to throw the baby out with the bathwater. I think we can talk about privacy and consumer protection, and all of the ethical issues, without dismissing the potential of the technology,” she said.

Death of the dinosaur

One of the main arguments against sex robots is that they will harm how humans treat one another. There’s particular concern they will promote rape and the objectification of women and children.

In contemplating this view, it’s interesting to consider what Darling discovered in her Pleo experiment. Pleo is a very realistic dinosaur robot that costs $500 and is about the size of a regular stuffed animal. It’s quite cute, if you are into robotic reptiles. And if you don’t treat it nicely, it will screech and show other lifelike signs of distress.

For her experiment, Darling gave five Pleo toys to different groups of people. She told them to name the robots and play with them. Then she asked the groups to torture and kill the Pleos. Darling described what happened next as “dramatic.”

People outright refused to hurt their Pleos. Darling and her team tried to coax them into destroying them anyway, and, in the end, only one Pleo was ruined. Most participants had become too attached to their mechanical pets to cause them harm.

According to Darling, we humans already relate emotionally to objects. For instance, people love their phones, their cars, and, as many parents with young children can tell you, their stuffed animals. However, the effect is more intense with robots because they move and, in some cases, are designed to respond emotionally.

Case in point: the public outcry over a Boston Dynamics video of people kicking a dog-like robot. The blows were meant to demonstrate the robot’s stability. Nonetheless, PETA received so many complaints that it released this statement to CNN:

[box type=”shadow”]

“PETA deals with actual animal abuse every day, so we won’t lose sleep over this incident,” the group said. “But while it’s far better to kick a four-legged robot than a real dog, most reasonable people find even the idea of such violence inappropriate, as the comments show.”

[/box]

Human empathy toward robots is apparent in these examples. Yet Darling wants to do more than measure people’s emotional responses to them. She wants to know how our ability to feel empathy can change because of robots.

Could kicking the Boston Dynamics robot desensitize someone so they don’t have qualms about kicking an actual dog?

Could we use robots to encourage empathy in people, including children and prisoners?

Another experiment Darling ran, this one with smaller, insect-like robots, revealed that people who already had low empathy for others smashed the robots easily. On the other hand, people with high empathy for others were more apt to view the small robots as having human qualities.

The findings don’t establish whether using sex robots would cause people to act better or worse toward other humans. But they do show there is much to be explored in how people of different backgrounds and empathy levels could be affected by them.

Speaking of robots generally, Darling said, “I don’t think that robot ethics is actually about robots. I think that it’s about humans. I think that it’s about our relationship with robots, but mainly robot ethics is about our relationship with each other.”

Image source: re:publica
