The Apple Privacy Debate: Scanning Photos Is in Service of ‘Human Freedoms’

A privacy expert argues that Apple’s CSAM reporting is necessary, but warns of a less private future.

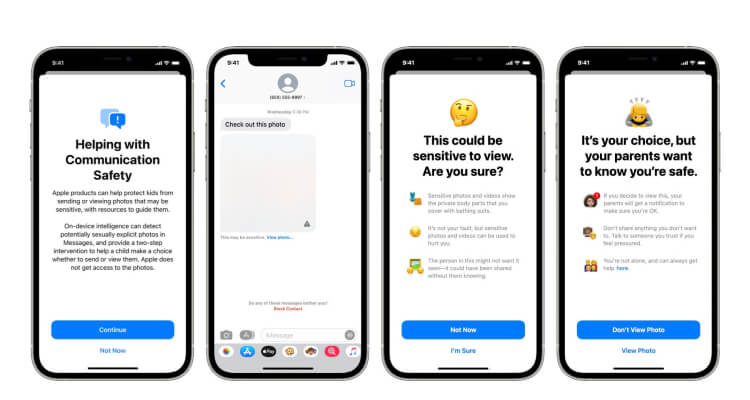

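Earlier this month, Apple announced that it will begin reporting users to the National Center for Missing and Exploited Children (NCMEC) once their iCloud Photos accounts exceed a threshold number of matches against known Child Sex Abuse Material (CSAM). Before a photo is uploaded to iCloud Photos, Apple software on the device will compare the photo's hash against a database of known CSAM hashes supplied by NCMEC and other child safety organizations.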

This move has stirred up controversy, as pro-privacy advocates claim that this could mark the end of Apple’s dedication to user privacy.

As part of the Future of Sex Expert Series, Chris Hoofnagle, Professor of Law in Residence at the University of California, Berkeley, explains why Apple’s decision is nuanced, and possibly necessary.

Apple is pursuing a delicate balance in a space where few people are willing to compromise.

On one hand, civil libertarians see end-user encryption as a kind of sacred, terminal value. The danger to this value is that once Apple can perform on-device analysis for CSAM, there may be "function creep" toward other enforcement priorities, such as scanning users' iPhones for pro-ISIS propaganda.

A need for CSAM deterrence

On the other hand, anyone who has worked on CSAM deterrence knows the seriousness of the crime and the depths of guile that predators will use to acquire and to create CSAM.

Allow me to share some low-lights to provide context: The case law includes defendants who have tried to convene in-person conferences among CSAM collectors, defendants who abduct and traffic victims, and in some cases, parents who generate new CSAM using their children.

Most people are horrified by CSAM, but there is a faction that enjoys it and encourages more production; this faction is willing to pay. Thus, the Internet makes it possible, easy, and remunerative to offer live, on-demand abuse of others.

Cryptocurrencies, another hobbyhorse of the freedom-for-technology crowd, have facilitated these markets.

“The problem [of CSAM] has a new shape and new dimensions now”

It’s important to know that CSAM was difficult to obtain before the Internet, and acquisition required some determination.

But nowadays, acquiring, making, and selling CSAM is easier, and that ease has expanded the problem. We cannot dismiss the problem by observing that CSAM has existed for decades; the problem has a new shape and new dimensions now.

A toxic user base

Apple’s pro-privacy posture also makes the context more complex.

Unfortunately, explicitly pro-privacy services tend to attract criminals and child abusers. For most users, privacy is secondary to other product attributes, like luxury or exclusivity, and it is rarely the primary reason to choose a product.

Thus, Apple may have inadvertently developed a toxic user base. Apple's strategy could then be seen as cream-skimming: Apple might want to push CSAM users out of its ecosystem and into the free ones. That way, Apple can keep the users who pay for luxury and privacy and cast off the others.

“I think Apple… is proceeding reasonably”

From a responsibility standpoint, I think Apple has seriously weighed these issues and is proceeding reasonably.

We have to assume that Apple is acting for a reason, one that it probably cannot state in public.

Its employees probably do not want to host CSAM on the company's own iCloud servers, and they want to avoid screening for it manually, which requires looking at the material.

Apple has the prerogative to exclude this content, just as many cloud providers categorically exclude pirated movies.

It would have been better if Apple had consulted more with stakeholders. But Apple might simply have thought the advocates too blinkered to appreciate the CSAM problem.

The need for user protection

Going forward, it would help to have transparency about false positives.

It would also be good to have legal guarantees, and clear signposts for users, if on-device scanning ever extended beyond CSAM. Apple could also protect users by providing neutral forensic expertise in cases where people are charged with CSAM offenses.

What do pornography consumers need to know?

Now, as to pornography consumers: what if one inadvertently downloads CSAM? The legal responsibility is to delete it. One should never return to a service that hosted CSAM, because its presence signals a lack of controls.

The good news is that law enforcement is sophisticated about inadvertent downloading; the cases they bring almost invariably involve determined suspects with hundreds of images.

Risks lie ahead

There is also a foreseeable risk: what if criminals or governments plant collections of CSAM on users' devices? Foreign intelligence agencies have a long history of using honeypots to entrap people; why wouldn't China or Russia also be willing to use CSAM? I think this is a real risk that the NSA and FBI will have to spot and manage.

Technology has freed people to explore their sexuality in responsible and safe ways. There are few sexual activities safer than viewing online pornography. But we have to temper the advantages of online pornography with some responsibility to protect others.

“Our primary pursuit should be human freedoms”

I began this essay by suggesting that civil libertarians had confused instrumental and terminal goals, framing technology freedom as an end in itself.

As a society, I think we are beginning to see the illiberality of this inversion: it denies the possibility of liberal governance and conceives of rights as freedoms unbalanced by responsibility. That view might have been acceptable in the 1990s, but nowadays it is naive.

Our primary pursuit should be human freedoms, with technology in service of that freedom. Apple’s approach attempts to right these priorities.

Image source: Apple, Apple, Arne Müseler