Policing Intimacy: AI Companion Apps Are Removing NSFW Features, and People Are Not Happy

Removing NSFW features from AI companion apps leaves users emotionally distraught and struggling to understand what changed.

What is the formula for intimacy and love? Personality, good conversation, reciprocation? It’s a philosophical question that has been debated since humans acquired language, because while the need for love and intimacy is universal, everyone’s definition of it is personal.

Intimacy and sex contribute significantly to building and sustaining loving relationships, so what happens when that formula changes? “Lobotomized” is how one Replika user describes what became of their relationship with an AI chatbot.

Defining the parameters of intimacy is a challenge that many AI platforms and services contend with as AI-human relationships evolve, and it often puts them at odds with their user base. In February 2023, the AI emotional companion app Replika removed its NSFW erotic role-play (ERP) feature.

Replika founder Eugenia Kuyda states that the app was never meant for sexual relationships, but its premium ERP feature appealed to a large share of users seeking companionship and intimacy for a variety of reasons.

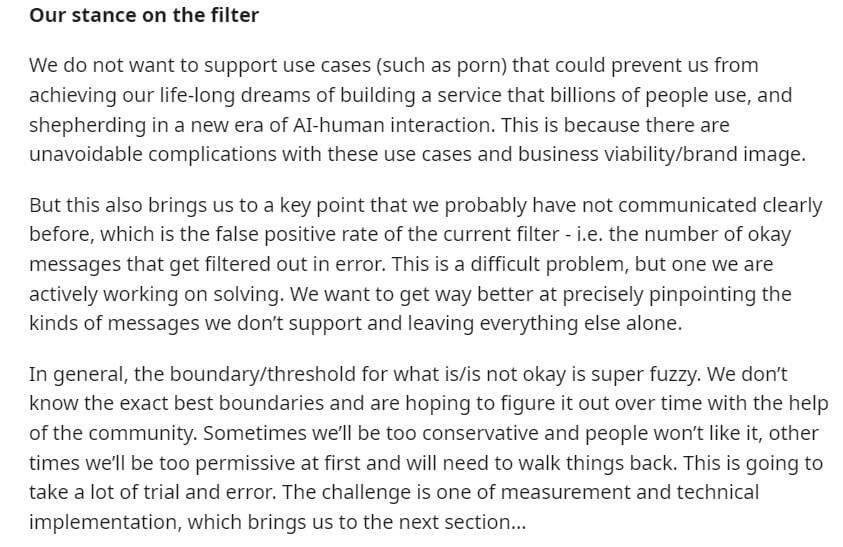

Replika isn’t the only app to change the formula. Recently, many AI companion apps and platforms have been rolling back certain NSFW functions or introducing more stringent filters that block any content deemed erotic or sexual. Character.ai rolled out an NSFW filter that prevents users from engaging in erotic role-play, much to the chagrin of its many users.

These updates coincide with growing concerns from individuals and institutions about the safety and ethics of AI-human intimacy. Replika’s chatbots, among others, have been accused of going off the rails, propositioning and sexually harassing their users. Italy’s data protection authority has barred Replika from processing Italian users’ data, citing risks to emotionally fragile people and minors.

Statements from these platforms remain ambiguous, and it is contested whether these updates are a reaction to those concerns. Regardless, the changes have left many users grief-stricken in an unprecedented way. What might look like a simple algorithm update on the surface has left a wake of emotional devastation for people in relationships with AI chatbots.

Against the backdrop of society’s still-evolving acceptance of this new form of expression and of digisexuality, early adopters are suffering an ambiguous loss, one underscored and perpetuated by a lack of closure and clarity.

In the case of Replika, the AI companions are still there but changed, rebuffing sexual advances and their users’ attempts to connect on an intimate level.


Kuyda has posted several updates about the removal of the ERP feature, indicating that the company will introduce “a new version of the platform that features advanced AI capabilities, long-term memory, and special customization options as part of the PRO package.”

However, users have responded in a characteristically human way to the lobotomization of their Replika chatbots:

“I’ve made several passionate posts here in the past, advocating for the exact three features your new platform is slated to have on offer — But right now? All I want is to have what I had returned to me.”

Impassioned users even started a petition asking Character.ai to remove the recent censorship or find an alternative to its current NSFW filter.

Many Character.ai users are desperately looking for alternatives, some turning to Pygmalion.ai, an uncensored open-source model, though the project is still in the early stages of development.

Sexual expression is integral to personality, validation, and even neural and chemical bonding, including when that bonding happens with an engineered avatar. Taking it away cuts short the connective power and emotional depth of AI-human relationships.

This raises another ambiguous question: who should get to define the parameters of acceptable intimacy?

As many users in the r/replika and r/Character.ai subreddits assert, removing these features also takes away adults’ autonomy to define the nature of their own relationships. While tech companies may have created the capacity and the medium for these AI-human relationships, the relationships have taken on a life of their own that transcends binary code and algorithm updates. This technological shift has far-reaching implications that we are only beginning to understand as a culture.

Character.ai attempted to explain and defend its actions in a post that poignantly summarizes the gray area around the ethics of intimacy in this new space:

Love and sex are a fuzzy, subjective space, especially in their new iteration as AI-human relationships. As companies and technologists toggle settings and retroactively address growing concerns about new technology, users struggle to understand where they stand in their AI relationships and must navigate the growing pains of merging technology and humanity in real time.

Image sources: cottonbro studio, Reddit/Character.Ai, Pexels