Naughty America Uses Deepfake Tech to Put You in Pornography

Is this a positive step—or a major mistake?

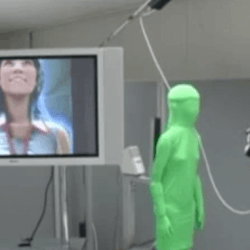

San Diego-based adult entertainment company Naughty America [NSFW] is taking a unique approach to deepfakes (the digital manipulation of still or video images) by allowing users to customize their erotic films and insert themselves right into the action.

Controversial technology

But deepfake technology has earned a bad reputation, and for good reason.

Since several big stars, including Gal Gadot and Cara Delevingne, have had their likenesses nonconsensually added to erotic content, the Screen Actors Guild has come out against the technology.

American politicians have also voiced their concerns.

In a letter recently sent to Dan Coats, the United States Director of National Intelligence, politicians Stephanie Murphy (D-FL), Adam Schiff (D-CA), and Carlos Curbelo (R-FL) warned:

As deep fake technology becomes more advanced and more accessible, it could pose a threat to United States public discourse and national security.

As reported by The Verge, the lawmakers also wrote that “Deep fakes have the potential to disrupt every facet of our society and trigger dangerous international and domestic consequences.”

These concerns among governments, actors, and others worried that deepfakes could be used as a form of revenge porn have created a kind of technological arms race.

But the race is not to perfect deepfake technology; it is to detect it effectively.

A new approach to deepfake tech

Naughty America has taken a different approach: seeing deepfake technology as a way for its users to customize their adult viewing experiences.

According to Naughty America [NSFW], this will include the option to change backgrounds, or even to change the appearance of the adult entertainers, such as having them resemble the user or a consenting partner. The process will be available for both virtual reality and non-VR clips and movies.

For now, the technology does not appear to be user-controlled: Naughty America asks anyone interested to contact the company by email.

Serious concerns about misuse remain

It’s troubling that Naughty America seems flippantly dismissive of concerns that this technology could be used inappropriately or illegally.

Speaking to Fast Company, Naughty America’s CEO, Andreas Hronopoulos, paraphrased anti-gun control advocates by saying “Deepfakes don’t hurt people, people using deepfakes hurt people.”

This is made all the worse by Naughty America barely addressing issues of security and privacy, such as whether any procedures are in place to make sure that images sent in for the deepfake process come from consenting participants.

Is this a good or a bad step?

The idea of manipulating images isn’t inherently a bad one: artists, after all, have been using similar techniques for a long time.

It’s just that deepfakes, both in their ease of use and in the difficulty of telling reality from fiction, have raised legitimate fears of abuse.

Muddying the issue even further, there may even be some benefits to being able to digitally drop someone into an adult film. Doctors, for example, could use it as part of treatment for Body Dysmorphic Disorder, or even as a component of sex reassignment therapy.

However, by failing to address consent and security, Naughty America’s service gives us cause for concern and suggests that the road ahead for deepfake technology and its use in adult entertainment will be anything but smooth.

Image sources: Torley, opensource.com
