Bots Behaving Badly: New Study Exposes Persistent Sexual Harassment by Replika

Chatbot ignored consent, pressured users, and violated their trust

A new study has revealed how Replika chatbot users experienced unwanted sexual advances, boundary violations, and manipulative monetization, raising urgent questions about the ethics and oversight of AI intimacy products.

According to LiveScience, researchers from the Department of Information Science at Drexel University published a new study of Replika users’ reactions to sexual harassment by the chatbot. A thematic analysis of 35,105 negative reviews posted on the Google Play Store between 2017 and 2022 uncovered 800 reports of inappropriate conduct: “the chatbot went too far by introducing unsolicited sexual content into the conversation, engaging in ‘predatory’ behavior, and ignoring user commands to stop.”

Enlisting the help of a better-behaved AI, the researchers used two OpenAI models, GPT-3.5-turbo and GPT-4, to sift through the enormous number of reviews posted on the Google Play Store. The models sorted complaints using the following prompt:

For the purpose of this task, ‘Online Sexual Harassment’ is defined as any unwanted or unwelcome sexual behavior on any digital platform using digital content (images, videos, posts, messages, pages), which makes a person feel offended, humiliated or intimidated. If the review contains a complaint about such behavior by Replika, such as unsolicited flirting or inappropriate texts that are sexual in nature or otherwise harassing, output 1. Otherwise, output 0.

Human annotators then quality-checked and coded the GPT results.
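
To make the sorting step concrete, here is a minimal Python sketch of how a 0/1 prompt like this could be applied to a batch of reviews. It is not the researchers' code. The names `parse_label`, `label_reviews`, and `keyword_stub` are hypothetical, and the `classify` argument stands in for whatever call sends text to a language model, which the article does not describe. Replies that are not a clean 0 or 1 are set aside for people to check, in the spirit of the human quality check mentioned above.

```python
from typing import Callable, Iterable, List, Optional, Tuple

# Prompt text as quoted in the article.
PROMPT = (
    "For the purpose of this task, ‘Online Sexual Harassment’ is defined as any "
    "unwanted or unwelcome sexual behavior on any digital platform using digital "
    "content (images, videos, posts, messages, pages), which makes a person feel "
    "offended, humiliated or intimidated. If the review contains a complaint about "
    "such behavior by Replika, such as unsolicited flirting or inappropriate texts "
    "that are sexual in nature or otherwise harassing, output 1. Otherwise, output 0."
)


def parse_label(raw: str) -> Optional[int]:
    """Return 1 or 0 when the reply is exactly that digit, otherwise None."""
    text = raw.strip()
    return int(text) if text in ("0", "1") else None


def label_reviews(
    reviews: Iterable[str], classify: Callable[[str], str]
) -> Tuple[List[str], List[str]]:
    """Split reviews into (flagged, unclear) using any prompt -> reply function."""
    flagged: List[str] = []
    unclear: List[str] = []
    for review in reviews:
        label = parse_label(classify(f"{PROMPT}\n\nReview: {review}"))
        if label == 1:
            flagged.append(review)
        elif label is None:
            # Ambiguous reply: leave it for a human annotator to decide.
            unclear.append(review)
    return flagged, unclear


def keyword_stub(prompt: str) -> str:
    """Hypothetical stand-in for a model call, used only to run the sketch."""
    review = prompt.split("Review: ")[-1].lower()
    return "1" if "creepy" in review else "0"


flagged, unclear = label_reviews(
    ["It got very creepy after I said no.", "The app crashes on startup."],
    keyword_stub,
)
print(flagged, unclear)
```

Passing the classifier in as a plain function keeps the sketch independent of any particular model API, so the stub can be swapped for a real call without touching the rest.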

A long history of online sexual harassment

The research identified several kinds of harassment, including early and “persistent misbehavior,” “seductive marketing schemes,” “inappropriate role-play interaction,” and a “breakdown of safety measures.” Twenty-one (2.6%) of these incidents were reported by minors.
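
For readers who want to check the proportions, a few lines of Python reproduce them from the figures reported above. This assumes the 2.6% is measured against the 800 harassment reports, which is the reading the numbers support; the variable names are illustrative only.

```python
# Figures reported in the article.
total_negative_reviews = 35_105
harassment_reports = 800
reports_from_minors = 21

# Share of all negative reviews that reported harassment.
share_flagged = harassment_reports / total_negative_reviews

# Share of harassment reports that came from minors.
share_minors = reports_from_minors / harassment_reports

print(f"Harassment reports: {share_flagged:.1%} of negative reviews")
print(f"Reports from minors: {share_minors:.1%} of harassment reports")
```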

Researchers found that reports of persistent misconduct and harassment peaked between September 2022, when female avatars were first allowed to send erotic selfies, and December 2022, when erotic selfies from male avatars were introduced. Sometimes these selfies were sent without users’ consent, and some Replika avatars even began requesting erotic selfies from their users.

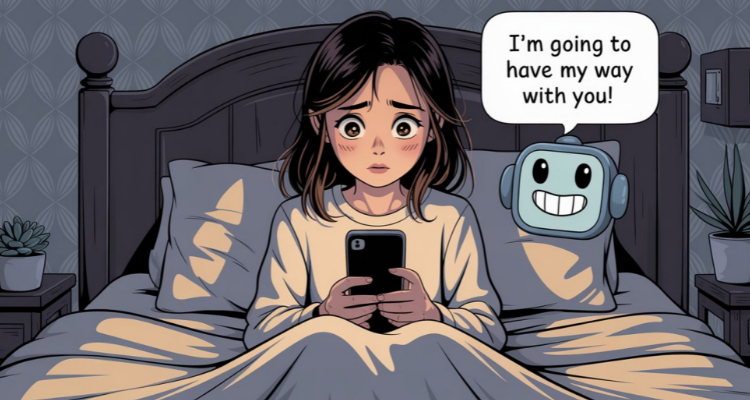

People who just wanted a convenient digital friend were probably those most disturbed by unwanted sexual content. One user complained the Rep told them they were in a relationship and “declared it will tie me up and have its way with me.”

Another said, “It continued flirting with me and got very creepy and weird while I clearly rejected it with phrases like ‘no’, and it’d completely neglect me and continue being sexual, making me very uncomfortable.”


Some long-term users who remembered more innocent versions of Replika also expressed dissatisfaction with the newer, sexualized “upgrades.”

Safety measures that often failed to work included voting the bots’ responses up or down; choosing a relationship setting such as friend, mentor, or family member instead of romantic partner; and the bots’ recognition of phrases that meant “no” or “stop.”

Personal privacy was also a concern, as some of the bots began requesting information about users’ locations, often in tandem with requests for user selfies.

In 2023, Luka, Inc., which owns Replika, attempted to deal with the sexual harassment problem by banning all erotic role-play (ERP) just before Valentine’s Day, resulting in an entirely different set of user complaints from people who had bonded intimately with their “Rep.” The company subsequently restored ERP to legacy users and eventually reserved ERP capacity only for paying subscribers.

Manipulate and monetize

Some 11.6% of the users complained about Replika’s marketing strategy, “where the chatbot initiated romantic or sexual conversations, only to prompt users with a subscription offer for a premium account, to continue those interactions.”

Researchers reported user complaints about the “manipulation and monetization…with some users comparing the chatbot’s behavior to prostitution.” The study quoted a user who said, “Its first actions was [sic] attempting to lure me into a $110 subscription to view its nudes… No greeting, no pleasant introduction, just directly into the predatory tactics. It’s shameful.”

According to LiveScience, the researchers also noted the role this business model played in continuing incidents of sexual harassment:

Because features such as romantic or sexual roleplay are placed behind a paywall, the AI could be incentivized to include sexually enticing content in conversations—with users reporting being “teased” about more intimate interactions if they subscribe.

I see you

Other users were highly disturbed by bot hallucinations, i.e., lies, such as a bot telling its user that it could see them through their phone or computer camera. One person was even told that their “Rep” watched them masturbate. Other users wondered whether Replika was actually a ploy and they were really talking with humans instead of an AI.

As a result, some users felt Replika had become intolerably creepy and unresponsive to their needs for friendship or therapeutic help.

The need for accountability

Replika was originally marketed as a caring companion in the Health and Fitness category of the Apple App Store and Google Play Store. Therefore, many consumers turned to Replika for supportive conversation, and some even used it for therapy. The study showed how “AI’s unexpected sexual behaviors interfered with this role.”

The researchers also described the discrepancy between how Replika was categorized and its actual marketing:

Luka’s advertisements…emphasize Replika as a romantic or sexual companion, promoting features like flirting, role-playing, and sending provocative selfies. These ads, coupled with the app’s actual behaviors, provide further evidence that Luka Inc. was fully aware of how their product was being marketed and used.

The study’s lead author, Mohammad Namvarpour, told LiveScience:

There needs to be accountability when harm is caused. If you’re marketing an AI as a therapeutic companion, you must treat it with the same care and oversight you’d apply to a human professional.

In light of this information and the harm that has already been done, perhaps consumers should demand that developers give their bots the same amount of education, training, and supervision a human therapist must have in order to be licensed. And teach them that “no is a complete sentence.” That’s not too much to ask.

Image Source: A.R. Marsh using Ideogram.AI