California’s New Chatbot Companion Law Aims to Safeguard Users

Regulation mandates developer accountability, blocking minors from accessing adult content

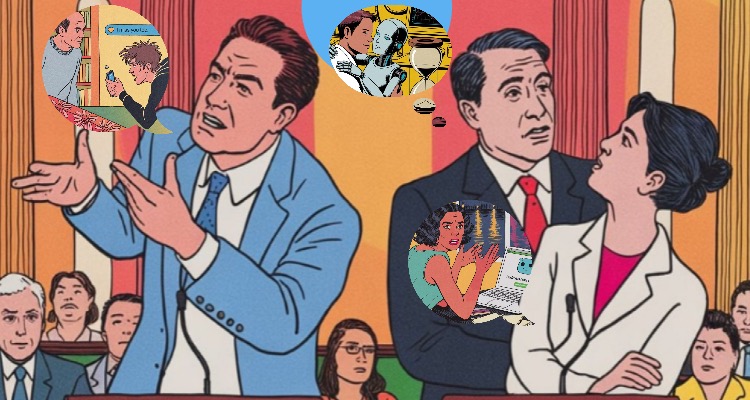

CA SB 243, the chatbot companion law, was signed by Governor Gavin Newsom on October 13th, according to a press release from the office of state senator Steve Padilla. Padilla and Josh Becker, another state senator, introduced the legislation last January.

The press release states the legislation is designed to “provide reasonable safeguards for the most vulnerable people,” particularly minors. The law takes effect on January 1, 2026.

The law distinguishes companion chatbots from customer service, business, and research bots, defining a companion chatbot as:

…an artificial intelligence system with a natural language interface that provides adaptive, human-like responses to user inputs and is capable of meeting a user’s social needs, including by exhibiting anthropomorphic features and being able to sustain a relationship across multiple interactions.

However, many people have formed emotionally, and sometimes sexually, intimate relationships with business and research bots like ChatGPT, despite user guidelines and AI guardrails. It is therefore unclear whether the legal distinction will rest on how a bot is actually used or on how its platform defines the bot's purpose.

And when a chatbot product such as Grok 3 tries to be all things to all people, offering a “sexy mode” toggle for NSFW chats within the same AI system that has also infiltrated the US government, matters may become even murkier.

How might the law impact chatbot sex?

The new law is concerned primarily with preventing chatbots from encouraging or ignoring threats of self-harm and suicide, as well as preventing minors from accessing sexually explicit content, as defined by Section 2256 of Title 18 of the United States Code.


Adult access to sexually explicit content is largely unregulated by SB 243. For the moment, most California adults who use chatbots for erotic purposes are not in danger of losing access to NSFW content if they already use an uncensored app.

Most adults, perhaps, but not all. As Senator Padilla told TechCrunch: “We can support innovation and development that we think is healthy and has benefits—and there are benefits to this technology, clearly—and at the same time, we can provide reasonable safeguards for the most vulnerable people.”

Those most vulnerable

The exact phrase does not appear in the law’s text, and vulnerability is never defined. It may be assumed that people who are suicidal or depressed are the “most vulnerable people” lawmakers had in mind, but the law does not say so.

This is troubling because many people besides Senator Padilla use the phrase when describing the law’s intent, and in some cases it may be interpreted more broadly than is appropriate.

For example, Jodi Halpern, MD, PhD, UC Berkeley Professor of Bioethics and Co-Director of the Kavli Center for Ethics, Science and the Public, was quoted in Padilla’s press release:

There are increasing lawsuits related to suicides in minors and reports of serious harms and related deaths in elders with cognitive impairment, people with OCD and delusional disorders. There are more detailed reports of how companion chatbot companies use techniques to create increasing user engagement which is creating dependency and even addiction in children, youth and other vulnerable populations.

Let’s consider that statement in the context of sexual behavior and desire. People with intellectual disabilities, cognitive impairment, and other disabilities and health conditions, including those living in congregate care settings or otherwise under the close supervision of social workers and other care providers, are often presumed unable to consent to sexual activity, even when they can.

Their sexual desires and activities, partnered or solo, are often deemed unsuitable to their conditions and are sometimes policed or prevented by well-meaning people who regard sex among older or disabled people as inappropriate, dangerous, or the result of predation, even when the partner is only an AI boyfriend or girlfriend.

Deviance compounded, hazards rising

Many people still consider chatbot sex deviant in and of itself, and matters may worsen if a social worker or care provider discovers that their charge is engaged in digital activities they consider morally wrong or disgusting, such as queer, kinky, and/or multiple-partner bot sex.

This kind of hostility among social service workers is well documented in numerous studies, including a 2022 literature review that found:

Religious affiliation and religiosity can inform negative attitudes towards LGBTQ people among healthcare, social care and social work students, practitioners, and educators.

Christian students and practitioners who take a literal interpretation of the bible are more likely to be resistant to reflective discussions and to moderating their beliefs and attitudes.

These biases sometimes have tragic consequences, such as denial of sexual expression, separation from partners, or false charges of abuse against the top in a consensual kink relationship, even for people whose relationships are exclusively human-to-human.

Because the concept of “the most vulnerable people” is undefined, the law’s intent is open to interpretation. That ambiguity is potentially hazardous not only to users, who may find their sexual autonomy threatened if someone decides they are vulnerable, but also to companion bot providers, whose legal risk may increase as a result.

Cultivate AI risk awareness and make it a quickie

As evidence of the hazards of chatbot use accumulates, consumers should be cautious about intimate contact with AI, and perhaps avoid it altogether until regulations such as CA SB 243 are enacted, and enforced, throughout the US and elsewhere.

Providers must be more diligent in finding and fixing safety issues and in informing consumers about them. For example, an OpenAI blog post about ChatGPT admits:

Our safeguards work more reliably in common, short exchanges. We have learned over time that these safeguards can sometimes be less reliable in long interactions: as the back-and-forth grows, parts of the model’s safety training may degrade.

In other words, if you’re over 18, get it on if you must, but don’t linger in the digital arms of your bot. Maybe save lingering for human lovers, if you have them, or a nice time in the bath if you don’t.

Image source: A.R. Marsh using Ideogram.ai