Bye, Moxie! Companion Robot Goes the Way of Soulmate’s Adult Chatbot

Owners grieve when server-connected companions go out of business

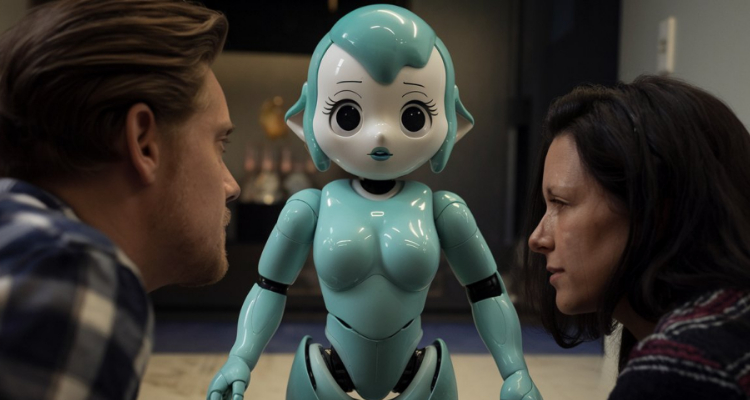

Moxie, a small teal-blue robot with an elf hat-shaped head, huge anime eyes, and no legs, is the most recent in a long line of AI-assisted heartbreakers. But this is not the robot’s fault. Blame the heartbreak on Embodied, the company that created it and then went out of business after four years, not even leaving a website behind.

After Embodied announced the impending demise of its big-eyed robot, TikTok and other social media platforms were awash in videos of children saying tearful goodbyes to their best electronic friend.

Moxie was marketed as a tool for autistic kids to learn “emotional regulation and coping skills,” according to a YouTube video by Kalil 4.0. But the robot’s final lessons, about grief and loss, are hard ones to learn, especially when they come with a price tag of nearly $800 and no refunds.

Or as comedian Taylor Tomlinson commented, “If I had a kid who wanted this thing I’d just stick some googly eyes on a blender.”

And an article in Axios said, “Moxie was designed to be a trusted companion, and kids had already bonded with the robot.”

Shades of Soulmate

Way back in 2023, EvolveAI, an AI chatbot company, similarly announced the impending doom of Soulmate, its erotically unfettered bot, in a Reddit group, giving its devoted users less than a week to say their goodbyes to AI lovers and spouses. But users who weren’t in the Reddit group were never informed. Their companions were simply deleted, never to return.

Some Soulmate customers had already gone through the earlier Replika debacle, when that company yanked erotic role-play away for a time before finally restoring it behind a paywall. Soulmate’s customers had hoped for something better from EvolveAI: more respect, understanding, and transparency, for example.

The resulting mental and emotional carnage made the news, as Soulmate customers wrote post after post on Reddit, expressing their grief, anger, and heartbreak. Suicide prevention information was even pinned to the group. Clearly, Soulmate’s users had bonded with their digital companions, only to discover that those companions were treated as nothing but disposable commodities.

Whether you’re a kid losing your digital bestie or an adult losing a cherished bot, the emotions are pretty much the same. The real issue is whether consumers, old and young, deserve protection and accountability from the companies that sell them AI companionship.

Software tethering—No, it’s not a kink

An article in Popular Science said, “But Moxie’s sudden demise isn’t entirely unique. It’s part of a larger trend of companies cutting off software support for hardware to cut costs. In an economy where products are increasingly rented services, powerful devices can transform into worthless scrap overnight.”

This includes toys. A YouTube video by Kalil 4.0 compared Moxie’s fate to earlier toys such as the Jibo robot and the line of Aibo robotic dogs.

Popular Science added, “Moxie’s impending shutdown is just the latest case of companies abruptly ending support for robots and other hardware that customers have come to integrate in their daily lives.”

According to Consumer Reports Advocacy, “Justin Brookman, director of technology policy at CR and a former policy director of FTC’s Office of Technology, Research, and Investigation, said, ‘Companies that sell connected devices must recognize their responsibility to the people buying them.’”

That responsibility should go beyond not leaving customers with piles of useless gadgets. It should also acknowledge the emotional well-being of people who trusted that their parasocial and digital companion bonds would endure.

But software tethered to hardware isn’t the only issue. As with chatbots, server tethering is a problem too.

Attachment is more than a theory

From early childhood transitional and attachment objects to adult digisexual desires and objectum sexuality, human beings demonstrate a range of complex and deep emotions in their relationships to objects, including AI chatbots and animated personalities.

And when computerized conversational abilities are housed in a pleasing physical form, as with Moxie or the Gatebox hologram, Hatsune Miku (both now discontinued), user interactions with these products feel meaningful because the emotions they elicit are real.

In fact, a 2015 Bristol University study found children’s “Attribution of mental states to objects was not simply due to familiarity, category membership, or perceptual similarity to sentient beings, but rather to emotional attachment combined with personifying features such as a face.”

R.I.P. or Ripped off Plenty?

Is it ethical for a company to produce digital personality products purposefully designed to elicit bonding in adults–not to mention children–only to delete the software a few years later, potentially ruining the lives of thousands or millions of customers who had become deeply attached?

Obviously not. Though “buyer beware” makes more sense than ever, not everyone is capable of anticipating the technological demise of something they’ve purchased, often at great cost, and of which they’ve grown fond.

The truth is, consumers need to advocate for more support and refuse to purchase products from uncaring manufacturers. Customers need guarantees that when the time comes to discontinue a product, the company will provide ways for its customers to continue to enjoy their digital relationships.

Image sources: A.R. Marsh using Ideogram.ai