AI Dungeon’s New Moderation Policy Raises Privacy Concerns

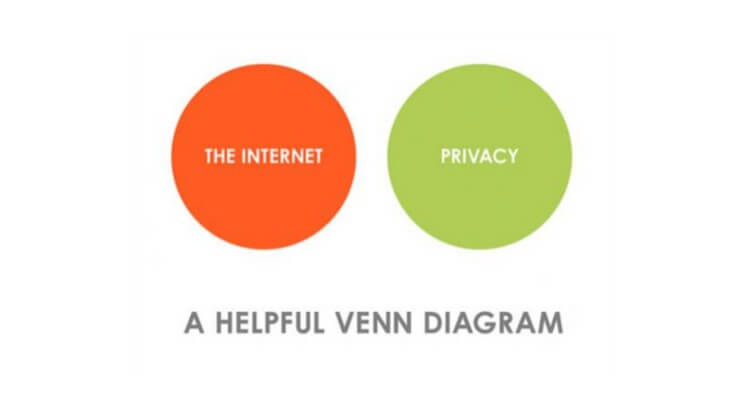

In an attempt to root out child pornography, the platform exposed law-abiding users to privacy risks.

Users of the popular text-adventure game AI Dungeon have unearthed a potentially troublesome use of content-moderation software on the platform.

AI Dungeon community members began asking questions when they noticed their content being flagged as inappropriate more often than usual.

AI Dungeon’s automated content-moderation system

In a blog post, Latitude, the development team behind AI Dungeon, said that they had “built an automated system that detects inappropriate content.”

The system was built to flag sexually explicit content involving minors. Latitude claimed that it was largely successful and blamed “technical limitations” for wrongly flagged content.

Distrust among the AI Dungeon community

Members of the community have responded by pointing out that, while the game supports multiplayer play, Latitude is overstepping its authority by accessing and reporting games that can only be played by individual users.

What is AI Dungeon?

AI Dungeon is an online platform that allows anyone to assemble a text-based adventure game. The platform relies on text-generation technology from OpenAI to create constantly evolving worlds for players to participate in.

To play, users first select a setting, such as fantasy, apocalyptic, zombies, or mystery. Next, they input critical parameters related to their chosen setting.

After enough information is provided, AI Dungeon’s software takes over to fill in the blanks, or even assemble a unique adventure all on its own.

Perhaps inevitably, the open-ended platform has enabled many users to create sex-based games. One hacker reported that as much as half of AI Dungeon’s content may be pornographic.

Drawing the line at explicit content involving children

In March of this year, OpenAI and Latitude discovered that the platform was being used to create fictional, text-based sexual encounters with children. In response, Latitude implemented the AI content-moderation system without warning the AI Dungeon community.

Stories flagged by the AI system are then reviewed by Latitude employees. This has raised privacy concerns among AI Dungeon users who write explicit content that follows the community guidelines.

Although the AI was employed only to moderate explicit content involving children, its reach has been far broader.

Acceptable content is getting flagged

On Reddit, an AI Dungeon user reported that a non-erotic game was flagged simply for using an expletive. Speaking to Motherboard, this person said it wasn’t their only run-in with the platform’s moderation system.

“Another story of mine featured me playing as a mother with kids, and it completely barred the AI from mentioning my children,” the Reddit user said. “Scenarios like leaving for work and hugging your kids goodbye were action-blocked.”

Privacy concerns for law-abiding players

AI Dungeon’s user base and the privacy advocates following the story are particularly upset by the flagging of single-player experiences that were made exclusively for private, unshared use.

According to the same Motherboard article, Latitude stated on its Discord server that if a moderator found a game to be inappropriate, human moderators “may look at the user’s other stories for signs that the user may be using AI Dungeon for prohibited purposes.”

This has angered some AI Dungeon users, who now feel that they have no guarantee of privacy on the platform.

AI Dungeon’s role as a safe space

In the past, AI Dungeon was a safe place where users could experiment, play, be outrageous, and in some cases put together interactive adventures to help explore their sexuality.

This use of AI Dungeon to process emotional issues around sex is another reason why Latitude is on the receiving end of so much bad publicity.

After all, it’s one thing to post something to a social media platform for all to see, but it’s quite another when the content is meant for your own private enjoyment.

And when that content is deeply personal, often involving sexual issues, discovering that someone could access it at any time without your permission is a textbook emotional violation.

“Exposed and humiliated”

Reddit user CabbieCat expressed this perfectly to Motherboard, saying that Latitude is making its previously loyal fanbase “feel exposed and humiliated.”

He added, “This isn’t a child having their toys taken away nearly so much as it is suddenly revealing there was a hidden camera in the bedroom all along.”

A complex problem requiring thoughtful consideration

The situation with AI Dungeon is hardly unique: content platforms and social media appear locked in a perpetual struggle between giving users a place to create whatever they want and the harsh reality that not everything they create is appropriate.

If one thing is clear, it is that Latitude could have handled this better.

I suggest that much of this could have been avoided if Latitude had been upfront with its community, or, to take it a step further, if the company had worked with the AI Dungeon community rather than against it.

The episode shows what happens when a site fails to understand and accept why people use its platform, and how trust that is hard won can be so easily lost.

Image Sources: Josh Hallett, Book Catalog, Bernard Goldbach, Mike Lawrence, Rustam Gulav, purplejavatroll